AI Agents in Patient Access: What’s Real, What’s Hype, and Why It Matters

AI is everywhere in healthcare marketing right now.

But here’s the uncomfortable truth:

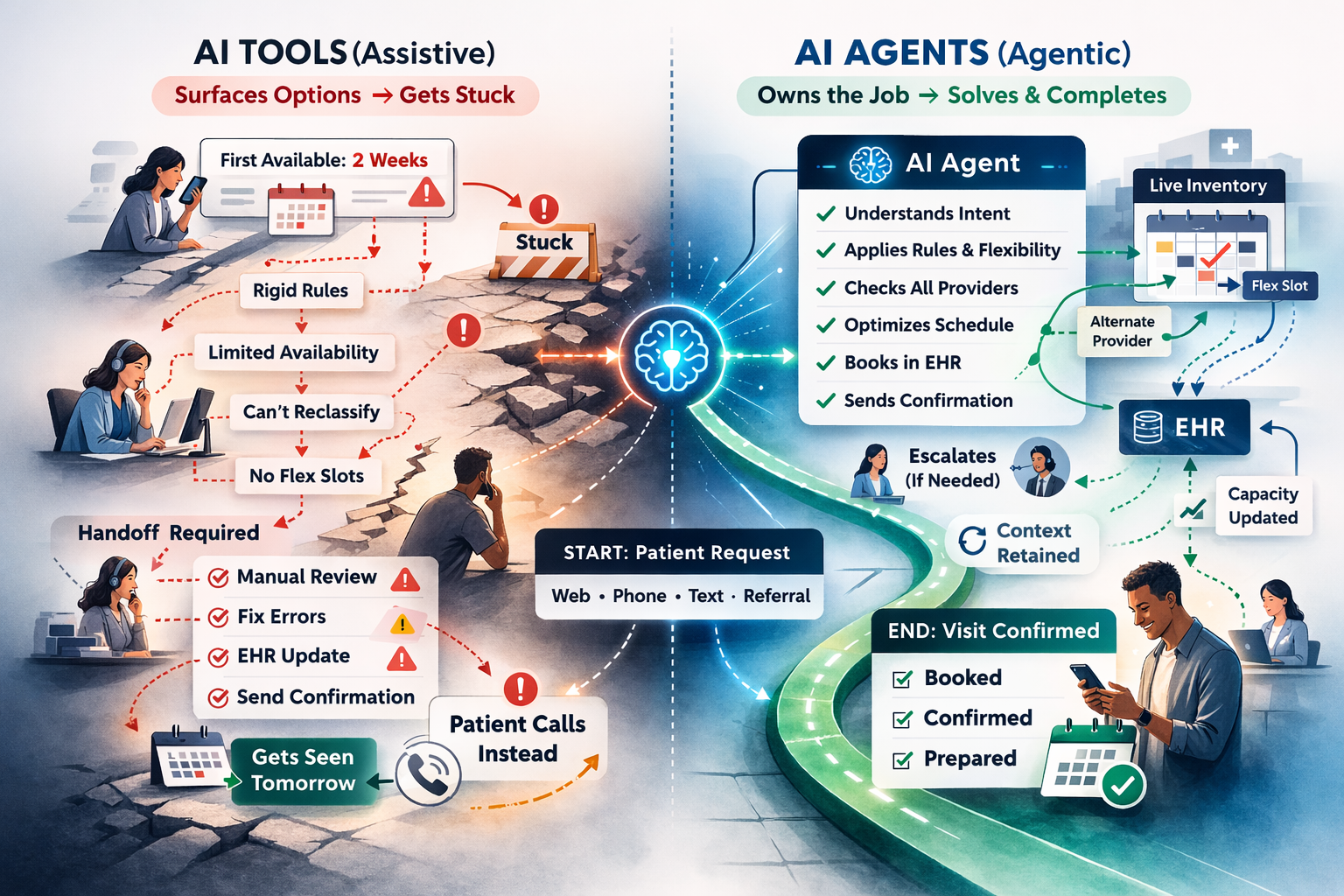

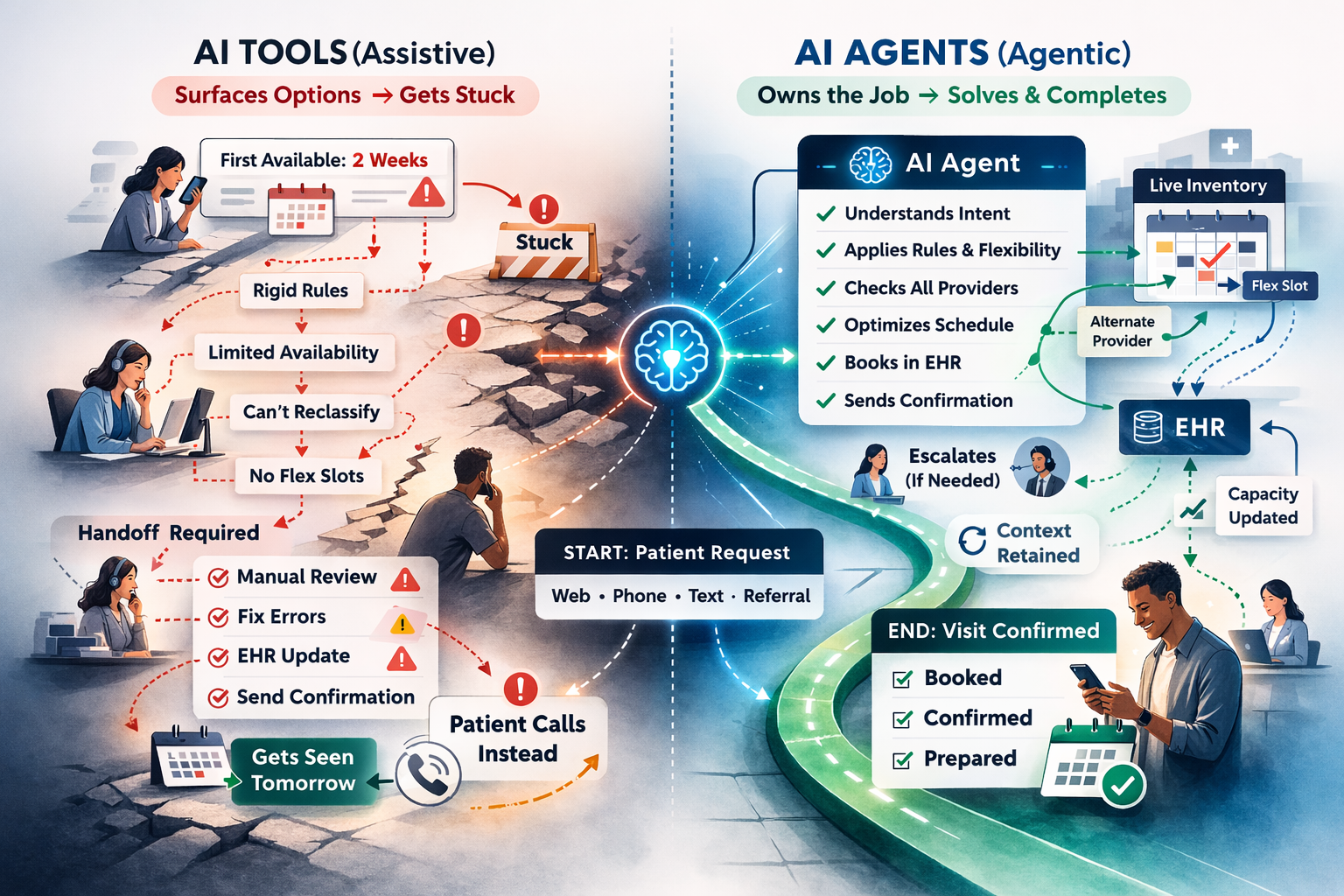

Most of what’s being called an “AI agent” in patient access isn’t actually agentic. It’s assistive. And in a system where the front door determines revenue, patient satisfaction, and staff burnout, that distinction isn’t academic. It’s operational.

First: What Is an AI Agent?

An AI agent is not just software with AI inside. It’s a virtual worker.

It has:

• Authority to act

• Responsibility for outcomes

• The ability to reason dynamically

• Clear boundaries

• The capacity to complete a job end-to-end

If it produces output that someone else has to fix, finalize, or reconcile, it’s not an agent. It’s just a tool. Is it helpful? Sometimes. Transformational? Rarely.

Patient Access Is a Journey, Not a Feature

The “front door” in healthcare is the full non-clinical journey:

First contact → scheduling → referral intake → reminders → visit readiness.

It’s fragile.

Dropped calls.

Unfinished bookings.

Referrals that stall.

Patients who never quite make it to care.

You don’t fix that with isolated features. You fix it with ownership.

The Manual Cleanup Test

Let’s start simple, with a patient using an AI scheduling system. The system:

• Collects preferences

• Surfaces appointment options

• Drafts a booking

But often a staff member has to follow behind it and:

• Review it

• Enter it into the EHR

• Fix errors

• Send confirmation

That is not the power of a real agent.

A real scheduling agent:

• Determines the correct visit type

• Books directly into the system of record

• Updates capacity

• Sends confirmation

• Knows when to escalate

• Preserves context if the patient drops and returns

The job is not complete until:

1. The action is executed

2. The system is updated

3. The patient has confirmation

If any of those require manual intervention, the AI didn’t own the job.
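As a rough illustration, the three completion conditions above can be written as a check over a single scheduling job. This is a minimal sketch, not a description of any particular product: `SchedulingJob`, its field names, and the `manual_touches` counter are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass
class SchedulingJob:
    """Hypothetical record of one scheduling job; all field names are illustrative."""
    action_executed: bool     # the appointment was actually booked
    system_updated: bool      # the system of record reflects the booking
    patient_confirmed: bool   # the patient received a confirmation
    manual_touches: int = 0   # number of times staff had to step in


def is_complete(job: SchedulingJob) -> bool:
    """All three completion conditions hold, regardless of who did the work."""
    return job.action_executed and job.system_updated and job.patient_confirmed


def agent_owned(job: SchedulingJob) -> bool:
    """The job is complete and no staff member had to intervene."""
    return is_complete(job) and job.manual_touches == 0
```

A job that a staff member finished by hand can still be complete, but under this check it does not count as owned by the AI.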

The Harder Problem: When Complexity Breaks the System

Manual cleanup is one failure mode. But there’s a more subtle and more damaging one. Imagine this scenario.

A patient goes online to schedule. The system says, “The first available appointment is in two weeks.”

Frustrated, the patient calls the clinic. The call center rep gets them in tomorrow. What happened? The digital tool wasn’t wrong. It was limited.

A human agent:

• Looked across providers

• Adjusted appointment types

• Used held or flex slots

• Interpreted urgency

• Understood the context of the complex rules

• Coordinated internally

The AI system just read the exposed availability. It couldn’t solve for situational complexity. And when patients learn that calling gets better results than digital, trust erodes instantly.
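The gap in that scenario can be sketched in a few lines. This is a simplified, hypothetical model, not how any specific scheduling product works: `Slot`, `calendar_reader`, and `agent_search` are names invented for the example. The guardrail that held slots may be released only for urgent requests is also an assumption.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Slot:
    provider: str
    days_out: int        # days from today until the appointment
    held: bool = False   # held/flex slot that is not exposed online


def calendar_reader(slots: Sequence[Slot], provider: str) -> Optional[Slot]:
    """Tool behavior: earliest exposed slot for the requested provider only."""
    candidates = [s for s in slots if s.provider == provider and not s.held]
    return min(candidates, key=lambda s: s.days_out, default=None)


def agent_search(slots: Sequence[Slot], provider: str, urgent: bool,
                 allow_other_providers: bool = True) -> Optional[Slot]:
    """Agent-style behavior: widen the search, but only within guardrails.

    Assumed guardrails: other providers may be considered when allowed,
    and held slots are released only for urgent requests.
    """
    candidates = [
        s for s in slots
        if (allow_other_providers or s.provider == provider)
        and (urgent or not s.held)
    ]
    return min(candidates, key=lambda s: s.days_out, default=None)
```

Given the same calendar, the first function can only report what is exposed for one provider, while the second may find an earlier slot that a human rep would also have found.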

Tools Surface Options. Agents Solve Problems.

Most AI-powered scheduling systems operate within predefined constraints.

They can:

• Read calendar availability

• Match basic rules

• Follow scripted flows

But they cannot:

• Reclassify urgency based on patient language

• Explore cross-provider flexibility

• Optimize for continuity of care

• Navigate specialty-specific constraints

• Balance access rules dynamically

• Create tasks when escalation is needed

So when friction appears, the system collapses into rigidity. It becomes a calendar reader. Not a capacity optimizer.

The Real Standard: Perform Like a Strong Human Rep

The benchmark for an AI agent in patient access is simple: Does it perform at least as intelligently as your best front-desk team member?

If a human consistently outperforms the AI in edge cases:

• When urgency is ambiguous

• When schedules are tight

• When appointment types don’t perfectly match

• When referrals are incomplete

Then the AI isn’t agentic. It’s scripted automation. And scripted automation doesn’t own the front door.

Agency Requires More Than AI Models

Solving for true agency requires:

• Deep integration into systems of record

• Specialty-level configuration

• Standardized but flexible workflows

• Clear escalation design

• Authority within guardrails

• Supervisable performance

• Measurable completion metrics

This isn’t about adding AI to a workflow. It’s about redesigning the operating model so the AI Workforce actually carries responsibility. That’s much harder than building a chatbot. Which is why many vendors avoid it.

The Ownership Test

There are two simple tests for whether something is truly agentic in patient access:

1. The Completion Test

Does the system complete the job end-to-end without human cleanup?

Not partial progress.

Not draft output.

Completion.

2. The Complexity Test

When scenarios get messy with limited availability, urgency ambiguity, and specialty nuance, does the system intelligently navigate within guardrails?

Or does it stall, default, or force the patient to call?

If patients routinely say, “I’ll just call,” your AI isn’t owning access. It’s deferring it.

Why This Matters Economically

Assistive tools reduce effort. True agents change structure.

Only agentic systems can:

• Lower cost per interaction

• Increase completion rates

• Reduce dropped access jobs

• Decrease human intervention per case

• Improve visit readiness before staff engagement

If your AI still requires routine human intervention at scale, your cost structure hasn’t changed. You’ve just added software. Ownership is what unlocks structural leverage.
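To make those measures concrete, here is a minimal sketch of how a few of them could be computed from a log of access jobs. The record layout (`completed`, `manual_touches`) and the function name `access_metrics` are assumptions made for illustration, and the numbers in any real deployment would come from your own systems.

```python
from typing import Dict, List


def access_metrics(jobs: List[dict], total_cost: float) -> Dict[str, float]:
    """Summarize a batch of access jobs.

    Each job is a dict with two hypothetical keys:
      "completed"      - True if the job finished end-to-end
      "manual_touches" - number of staff interventions on that job
    """
    n = len(jobs)
    if n == 0:
        raise ValueError("no jobs to summarize")
    completed = sum(1 for j in jobs if j["completed"])
    touches = sum(j["manual_touches"] for j in jobs)
    return {
        "completion_rate": completed / n,
        "interventions_per_case": touches / n,
        "cost_per_interaction": total_cost / n,
    }
```

Tracking these figures before and after a rollout is one way to see whether the cost structure has actually moved, or whether software has simply been added on top of the same manual work.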

Bottom Line

An AI system in patient access is agentic only if it:

• Completes jobs end-to-end

• Reasons dynamically through complexity

• Optimizes within defined boundaries

• Escalates intentionally, not by default

• Preserves context across interactions

• Performs comparably to strong human staff

If it only works when the scenario is clean and linear, it’s not an agent. It’s a smart form. And smart forms don’t fix the front door. Accountable digital workers do.
