Insight

Designing responsible guardrails for Healthcare AI agents

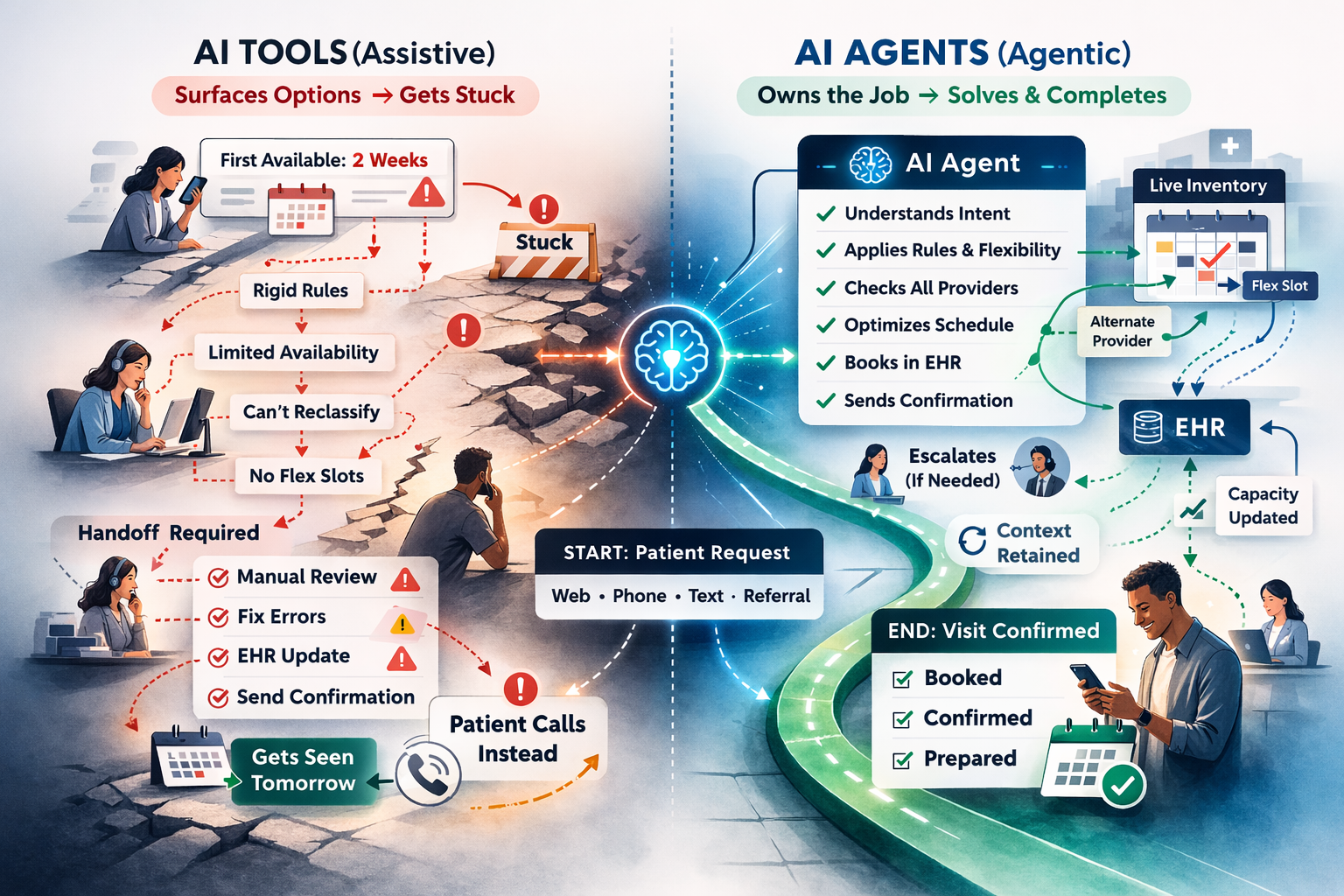

When AI agents sit on the front lines of patient access, they’re not “just software.”

They’re the new front desk, the new call center rep, and the first voice a patient hears when they need help.

That means they must be:

- Accurate

- Safe

- Compliant

- On-brand

- Humble enough to escalate when it’s not the right tool

In healthcare, trust isn’t a nice-to-have. It’s the whole game.

At Health Note, we’ve built our conversational AI platform with layered guardrails at every stage of the patient interaction—before, during, and after the conversation. These guardrails help ensure the AI stays grounded, follows operational rules, avoids hallucinations, handles sensitive situations appropriately, and escalates cleanly to a human when needed.

The result: AI agents that can scale access without scaling risk.

Before the conversation: Preventing issues with regression testing

Trust starts before the first patient ever calls. Thats why before deploying a Health Note AI agent into production, teams can run it through structured testing designed to catch failures early and validate predictable behavior.

This includes both single-turn and multi-turn test flows, covering everything from basic FAQs to complex workflows like:

- Scheduling a new appointment

- Rescheduling or canceling

- Routing to the right clinic or specialty

- Medication refill intake

- Billing questions and payment support

A key best practice: replaying real historical interactions against updated agent logic to confirm you’re improving performance without introducing new failures. This matters because LLM-based systems can be non-deterministic—meaning changes can have unintended consequences if you’re not testing aggressively.

During the conversation: Real-time guardrails without breaking speed

Once the conversation starts, the stakes go way up. The AI is now representing your organization in real time.

It needs to respond with:

- Accuracy

- Consistency

- Care

- Zero ego

That’s why Health Note enforces layered, real-time guardrails designed to protect patients, staff, and your brand—without turning every interaction into a slow, robotic mess.

Some safeguards run in parallel with response generation to minimize latency. Others evaluate the final response right before it’s delivered, gating actions when necessary.

Fast and safe. That’s the standard.

Guardrail #1: Bad actor detection (misuse + manipulation protection)

Not every caller is acting in good faith.

Some users will try to:

- Trick the agent into doing something it shouldn’t

- Force it off-topic

- Get it to reveal sensitive information

- Push it into unsafe medical territory

Health Note includes bad actor detection that evaluates intent early and continuously.

When flagged, the agent can:

- Deflect with a neutral, safe response

- Restrict certain actions

- Escalate to a human immediately

This prevents unsafe or brand-damaging conversations before they spiral.

Guardrail #2: Clinical safety boundaries (no guessing, no improvising)

Healthcare has a hard line:

If it’s clinical, urgent, or ambiguous — don’t wing it.

Health Note agents are designed to recognize when a request crosses into clinical territory, including:

- Symptom descriptions (“chest pain,” “shortness of breath”)

- Medication side effects

- Urgent care needs

- Medical advice requests

In these cases, the AI can:

- Provide safe guidance (e.g., recommend appropriate escalation)

- Route to the right team

- Avoid pretending it’s a clinician

This is how you reduce risk and protect patients.

Guardrail #3: Human escalations (clean handoff, full context)

Some conversations should never be fully automated.

Health Note supports configurable escalation rules so the AI knows when to defer to a human—like when the patient is:

- Upset or emotionally distressed

- Dealing with a sensitive billing situation

- Requesting something outside policy

- Asking for clinical advice

- Stuck in a loop

- Simply asking for a person

These rules can be evaluated during the conversation, and when triggered, the agent hands off gracefully with:

- A summary of the situation

- What the patient is trying to do

- What’s already been collected

- What actions were attempted

No repeat-yourself nightmare, just momentum.

Guardrail #4: Brand voice + patient-friendly communication

Healthcare language can get cold fast.

Patients don’t want a robot that sounds like a policy manual.

They want clarity. Calm. Confidence.

Health Note agents can be configured to match your organization’s voice, whether that means:

- Warm and reassuring

- Clear and direct

- Low jargon

- Culturally sensitive

- Accessible reading level

- Bilingual or multilingual support

This ensures every interaction feels like a real extension of your team—not a generic chatbot with a stethoscope sticker on it.

Guardrail #5: Response supervision (hallucination prevention + grounding)

Before any message is delivered, Health Note uses response supervision to validate that the output is:

- Relevant to the patient’s intent

- Grounded in trusted information

- Within approved boundaries

- Not inventing details

- Not overpromising actions it can’t take

If the system detects risk (hallucination, uncertainty, policy violation), it can:

- Revise the response

- Ask a clarifying question

- Restrict action-taking

- Escalate to a human

The goal is simple:

Plausible isn’t good enough. It has to be correct.

After the conversation: Closing the loop with continuous QA + monitoring

Trust doesn’t end when the call ends. In healthcare, post-conversation review is one of the most powerful ways to improve safety, accuracy, and operational performance over time.

Health Note supports always-on monitoring and QA workflows so teams can review conversations at scale—not by random sampling, but by actually catching what matters.

Instead of keyword-only alerts, monitoring can detect patterns like:

- Patient frustration

- Repeated failure points

- Escalation triggers firing too late

- Workflow drop-offs (e.g., scheduling attempts not completed)

- Confusing or outdated information

- Trends by clinic/location/service line

This helps your team tighten the system weekly without adding manual overhead.

End-to-end guardrails for safe, scalable healthcare AI agents

Conversational AI can be a massive unlock for access and operational efficiency. But only if it’s reliable. Only if it knows its boundaries. Only if it protects trust like it’s sacred.

Health Note delivers layered guardrails across the full lifecycle:

Before the conversation: regression + integration testing

During the conversation: real-time safety + escalation + supervision

After the conversation: monitoring + QA feedback loops

That’s how you scale AI agents across healthcare contact centers and front desks without scaling risk.

Want to see Health Note’s guardrails in action?

If you’re building the future of patient access, let’s make sure it’s one you can trust.

Want a demo? Let’s do it.

More Articles

Insight

AI Agents in Patient Access: What’s Real, What’s Hype, and Why It Matters

Insight

The Complete Guide to Conversational AI Agents for Patient Access

Insight

Designing responsible guardrails for Healthcare AI agents